You know those parents who love their child so much that they do not see how bad the child really is? I think you need a similar relationship with GNU/Linux if you really want to use it as your desktop operating system. That does not mean that it is generally bad. Just like the child, it has its strong points, for example the kernel. I am an avid fan of Linus Torvalds’ autocratic management of kernel development. And have no doubt, it is autocratic. He decides in which direction the kernel moves, and the success the kernel has had is, in my opinion, largely due to his pragmatic style.

One could say that the success of the kernel part of GNU/Linux was caused by his strong leadership. And in areas where the operating system does less well, there is a lack of leadership.

Take the desktop environments and window managers, for example. The main ones are KDE and Gnome. They are built on different UI frameworks, and it is already kind of sacrilegious to use a KDE application in Gnome, because it uses more memory. On top of that, the application won’t have the same style as the rest of the desktop.

Of course they have different systems for start menu entries, tray icons, settings and pretty much everything you can think of. Thankfully there is kind of a standardization body named FreeDesktop.org. The problem – as with every standardization process – is that it moves slowly and the resulting standard does not define all useful scenarios. Thus the new features are sometimes still not accessible in a common way.

We are talking about fragmentation within the operating system here: to make a GNU/Linux application that has a UI with a native look and feel, you need to do everything twice. Once with GTK (used by Gnome) and once with Qt (used by KDE).

But it does not end there: you have to think of the zillion other window managers out there. XFCE, Unity, Fluxbox, you name it. Thankfully most are based on either GTK or Qt. Nevertheless, in each one of those your application may not display its tray icon correctly.

And as you perhaps know: The UrBackup Client displays a tray icon.

Anticipating all these complications, I am using a cross-platform toolkit for the UrBackup Client: wxWidgets. Theoretically it is available for both Qt and GTK. Like every level of abstraction, this gives you slightly less power, but the application is simple, right?

Well, try to show such a balloon popup on Windows and we can talk. But otherwise it mostly worked well.

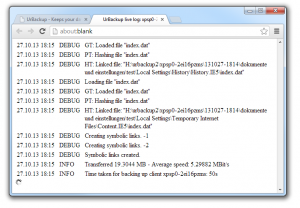

So I compiled the client on Debian and checked whether everything was working. And it was. Then, to test it on a more popular desktop distribution, I downloaded Ubuntu.

The tray icon did not show up. It turns out Unity has a whitelist of apps that are allowed to show tray icons. This annoys many users because, for example, Skype won’t show up any more. You can of course allow tray icons by editing some arcane setting somewhere. But this is not something the end user should have to do, right?

But it gets worse: After I edited that setting to allow all applications, it still did not work.

Turns out they did not like the FreeDesktop.org standard any more and made their own. In order for it to work I would have to use a separate library (libappindicator) to display the tray icon. Libappindicator only works with Unity on Ubuntu, so I would have to make and release a different version of my application for Ubuntu. Not acceptable.
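To make the cost of that concrete, here is a minimal sketch of the kind of per-desktop branching an application would need if it wanted to support both mechanisms. It is only an illustration, not what the UrBackup Client does. It assumes the session exports the `XDG_CURRENT_DESKTOP` environment variable and that Unity sessions put `Unity` in it; the function name and the backend labels are made up for this example.

```python
import os

# Hypothetical backend labels, used only for this illustration.
APPINDICATOR = "appindicator"        # Ubuntu's libappindicator route
FREEDESKTOP_TRAY = "freedesktop-systray"  # the FreeDesktop.org tray protocol


def pick_tray_backend(env=None):
    """Guess which tray mechanism to try first from the session environment."""
    env = os.environ if env is None else env
    # Assumption: XDG_CURRENT_DESKTOP may hold several colon-separated names.
    raw = env.get("XDG_CURRENT_DESKTOP", "")
    desktops = [name.strip().lower() for name in raw.split(":") if name.strip()]
    if "unity" in desktops:
        return APPINDICATOR
    # Everything else, including an unset variable, falls back to the
    # FreeDesktop.org protocol.
    return FREEDESKTOP_TRAY


if __name__ == "__main__":
    print(pick_tray_backend())
```

Even this tiny check is one more code path to build, test and ship, and it has to be repeated for every desktop that goes its own way.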

I’ll repeat: I’m using libwxgtk2.8, which is officially part of Ubuntu, to display a tray icon. This does not work because wxWidgets uses the FreeDesktop.org protocol to display the tray icon, and Ubuntu decided to abandon that protocol. The wxWidgets guys (understandably) do not seem to want to fix that issue in wxWidgets 2.9 either, probably because they do not want to implement something only for Ubuntu.

At the same time the Ubuntu fork Mint, which does not use Unity, is becoming more popular. So perhaps this specific problem will resolve itself that way. This issue certainly seems to have caused some waves: http://blogs.gnome.org/bolsh/2011/03/11/lessons-learned/

The bottom line of that article is that the FreeDesktop.org standardization process is broken. And this is just one example of the kind of fragmentation we developers have to think about.

Compare that to Windows, where a program that displayed a tray icon in Windows 95 can probably still display it in Windows 7. After 14 years! Too easy.

I said at the beginning that strong leadership is needed, just like for the Linux kernel. This leadership would have to coordinate efforts across different window managers and different distributions. This is difficult because the distribution is the thing the user sees and holds responsible when something is not working. This is also the reason why you install packages from your distribution: doing it any other way probably causes something to not work or even break. Because only distributors are accountable, it is very difficult to establish something like FreeDesktop.org, a standardization body that spans distributions. There is simply no incentive to play along nicely, especially since standardization processes tend to be lengthy and difficult and you want your distribution to be progressive and modern.

The only way I see to solve this dilemma is to have one distribution that the majority of GNU/Linux (desktop) users use. I hoped this could be Ubuntu, led by Mark Shuttleworth. But Ubuntu is sadly moving in the wrong direction at the moment.

On top of that, it is moving too fast. Given some time and persuasion, Gnome probably would have adopted the libappindicator interface and I would not have this problem now.

Unity is, in my opinion, really crappy. I did not even find out how to switch applications without Alt+Tabbing and had to use the Windows key to start one (start menu, where are you?). If this is someone’s idea of usability and end-user friendliness, then I give up all remaining hope for Ubuntu.

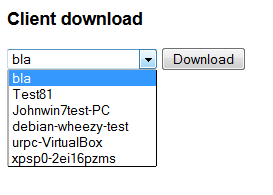

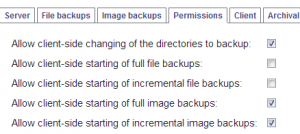

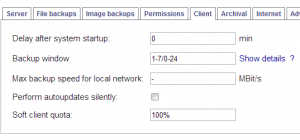

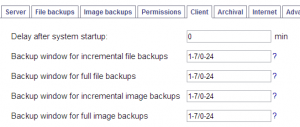

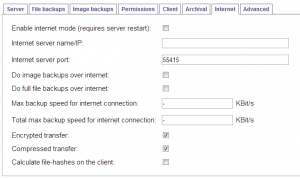

Given all that, I have decided not to start building any packages for Ubuntu/Debian. The support matrix would just be too large, and I have already named one issue. If you really love Linux so much that you use it as a desktop operating system, I leave it to you to grab the source code and build it yourself, with no guarantee that it works on your specific distribution in your window manager. Thankfully the back-end part, the part that does the backups, is not dependent on any flaky UI/window manager stuff and so should be here to stay. If the frontend does not work for you (i.e. it does not display the tray icon), you can always set the directories it backs up on the server.
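To give a rough feeling for how quickly such a support matrix grows, here is a small sketch that simply multiplies out the distributions and desktops named in this post. The lists are only the examples from above, not a real test plan, and not every combination is meaningful (Unity, for instance, is an Ubuntu thing), so treat the result as an upper bound for this toy list.

```python
from itertools import product

# Only the names mentioned in this post; a real matrix would also need
# distribution versions, toolkit versions and architectures.
DISTRIBUTIONS = ["Debian", "Ubuntu", "Mint"]
DESKTOPS = ["KDE", "Gnome", "XFCE", "Unity", "Fluxbox"]


def support_matrix(distributions, desktops):
    """Return every (distribution, desktop) pair that would need a test run."""
    return list(product(distributions, desktops))


if __name__ == "__main__":
    matrix = support_matrix(DISTRIBUTIONS, DESKTOPS)
    for distribution, desktop in matrix:
        print(f"{distribution} + {desktop}")
    print(f"{len(matrix)} combinations")
```

And that is for a single tray icon, before any release of any of these moves on to its next version.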

I hope that some time in the future someone from a distribution picks up that code and builds working packages for that distribution. But that someone won’t be me. This far, and no further! Sorry, Linux. I will still love you. But only as my server child. Not a desktop one.