I’m releasing UrBackup Server 0.26.1 and Client 0.40.1 soon. They contain only minor bug fixes and a Russian translation.

The next major version, which will probably be 1.0, will have the following new features:

First of all you will be able to start and stop backups from the server web interface.

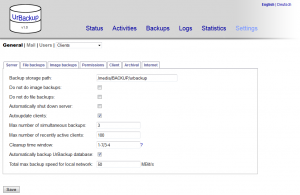

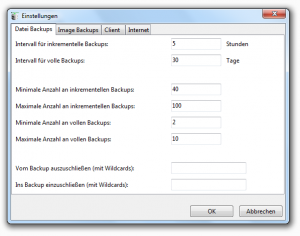

Then I reorganized the settings, both on the server web interface and on the client. You can also see the new bandwidth throttling feature which can limit the bandwidth usage of the backup server, both globally and for each client.

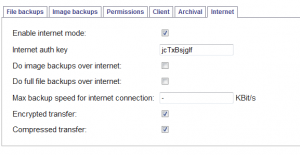
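
To illustrate how a global limit and a per-client limit can be combined, here is a minimal sketch using a token bucket. This is not UrBackup's actual implementation; the names `TokenBucket` and `send_delay` and all the numbers are made up for the example, and it only assumes that every transfer has to respect both limits at once.

```python
import time


class TokenBucket:
    """Hypothetical limiter: about `rate` bytes per second, bursts up to `capacity`."""

    def __init__(self, rate, capacity=None, clock=time.monotonic):
        self.rate = float(rate)
        self.capacity = float(capacity if capacity is not None else rate)
        self.tokens = self.capacity  # start with a full bucket
        self.clock = clock
        self.last = clock()

    def _refill(self):
        # Add tokens for the time that has passed, capped at the bucket size.
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now

    def delay_for(self, nbytes):
        """Charge nbytes and return how many seconds the sender should wait."""
        self._refill()
        self.tokens -= nbytes
        if self.tokens >= 0:
            return 0.0
        # The bucket is overdrawn: wait until the debt has been refilled.
        return -self.tokens / self.rate


def send_delay(nbytes, global_bucket, client_bucket):
    # Assumption: a chunk is charged against both limits and the stricter one wins.
    return max(global_bucket.delay_for(nbytes), client_bucket.delay_for(nbytes))
```

With a global bucket of 1000 bytes/s and a client bucket of 100 bytes/s, the client limit is the one that slows a single client down, while the global bucket only matters once several clients transfer at the same time.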

I added a few features to the new internet mode described in the last post. By default UrBackup does not do full file backups or image backups over an internet connection, but they can be enabled. The total global backup speed and the backup speed for each client can be set separately from the local backup speed. You can, for example, use this on the client to prevent UrBackup from using all your bandwidth. In addition to encrypting the transfer over the internet, UrBackup can now also compress it.

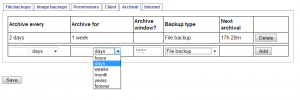

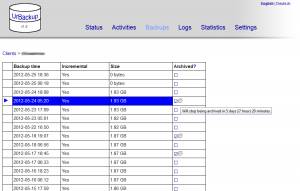

There is a new feature which lets you archive certain backups at certain intervals. Archived backups are not deleted during cleanups until they are no longer archived. In addition to the automated archival, you can also manually archive and un-archive individual file backups simply by clicking on them. For now only file backups can be archived.
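
As a rough sketch of what "not deleted during cleanups" means, the snippet below picks the backups a cleanup would be allowed to remove. It is only an illustration: `FileBackup`, `select_for_cleanup` and `max_keep` are hypothetical names, and it assumes that archived backups do not count towards the number of backups to keep, which may differ from what the server really does.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class FileBackup:
    backup_id: int
    created: datetime
    archived: bool = False


def select_for_cleanup(backups, max_keep):
    """Return the oldest non-archived backups beyond `max_keep`."""
    # Archived backups are never candidates for deletion.
    candidates = sorted((b for b in backups if not b.archived),
                        key=lambda b: b.created)
    excess = len(candidates) - max_keep
    return candidates[:excess] if excess > 0 else []
```

Once a backup is un-archived it becomes an ordinary candidate again and can be removed by the next cleanup if it is old enough.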

These should be the major improvements. There are some minor ones as well.

Everything except the internet mode is ready for testing, so if anyone wants to help, send me a mail at martin@urbackup.org or drop by the forums and I will upload the appropriate builds.