I’ve got a Xen server which runs a couple of Linux and Windows VMs. The VMs are stored in LVM volumes in an LVM volume group which sits on a bcache device. The bcache device consists of a mirrored SSD pair (using mdraid) as cache and a mirrored HDD pair (also using mdraid) as backing storage. The SSD caching gives a nice performance boost, but nowadays I would go with SSD-only storage, because bcache caused some problems (it did not play nicely with udev during boot).
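The layering described above could be assembled roughly like this. This is a sketch under assumptions, not a record of the exact commands I ran. The disk names (/dev/sda to /dev/sdd), the md device numbers and the resulting /dev/bcache0 are hypothetical. The helper function only prints the commands, because running them wipes the disks.

```bash
#!/bin/bash
# Dry-run sketch of the storage stack: an mdraid RAID1 (SSD pair) as bcache
# cache, an mdraid RAID1 (HDD pair) as bcache backing device, and LVM on top
# of the bcache device. All device names are hypothetical.
# The function only PRINTS the commands; nothing is executed.
print_storage_stack_cmds() {
    local ssd1=$1 ssd2=$2 hdd1=$3 hdd2=$4 vg=${5:-mirror-vg}

    # RAID1 mirrors: md0 = SSD pair (cache), md1 = HDD pair (backing storage)
    echo "mdadm --create /dev/md0 --level=1 --raid-devices=2 $ssd1 $ssd2"
    echo "mdadm --create /dev/md1 --level=1 --raid-devices=2 $hdd1 $hdd2"

    # Format the backing device and the cache device for bcache
    echo "make-bcache -B /dev/md1"
    echo "make-bcache -C /dev/md0"

    # Attach the cache set to the backing device; the cset.uuid value is
    # shown by 'bcache-super-show /dev/md0'
    echo "echo <cset.uuid> > /sys/block/bcache0/bcache/attach"

    # LVM physical volume and volume group on the bcache device
    echo "pvcreate /dev/bcache0"
    echo "vgcreate $vg /dev/bcache0"
}
```

Calling print_storage_stack_cmds /dev/sda /dev/sdb /dev/sdc /dev/sdd prints the seven commands in order. Keeping it a dry run makes it easy to double-check every device name before anything destructive happens.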

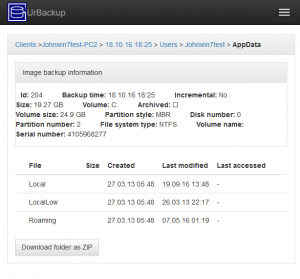

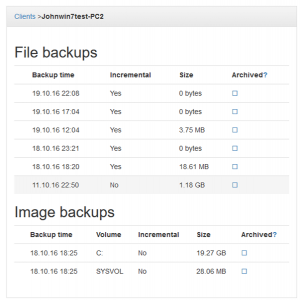

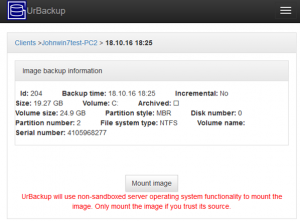

The Windows VMs are backed up by installing the UrBackup client in the VMs. To restore, I’d need to either boot the restore CD in Xen or restore the Windows images via the command line in the hypervisor.

The Linux VMs are backed up at the hypervisor level in the Xen dom0 (which is Debian in this case) using LVM snapshots. To create and remove the LVM snapshots I use the following scripts (the volume group containing the volumes is mirror-vg).

Snapshot creation script at /usr/local/etc/urbackup/create_filesystem_snapshot:

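```bash
#!/bin/bash
# Arguments used here: $1 = snapshot UID, $5 = name of the LVM volume
set -e

SNAP_UID="$1"
VOLNAME="$5"
VGNAME="mirror-vg"

if [[ $VOLNAME == "" ]]; then
    echo "No volume name specified"
    exit 1
fi

# The other-data volume lives in a different volume group
if [[ $VOLNAME == "other-data" ]]; then
    VGNAME="data2-vg"
fi

if [[ $SNAP_UID == "" ]]; then
    echo "No snapshot uid specified"
    exit 1
fi

export LVM_SUPPRESS_FD_WARNINGS=1

# Snapshot the volume, giving the snapshot all remaining free space in the VG
lvcreate -l100%FREE -s -n "$SNAP_UID" "/dev/$VGNAME/$VOLNAME"

# Remove the snapshot again if mounting it fails
SUCCESS=0
trap 'test $SUCCESS = 1 || lvremove -f "/dev/$VGNAME/$SNAP_UID"' EXIT

mkdir -p "/mnt/urbackup_snaps/${SNAP_UID}"
mount -o ro "/dev/$VGNAME/$SNAP_UID" "/mnt/urbackup_snaps/${SNAP_UID}"

SUCCESS=1

# Tell UrBackup where the snapshot is mounted
echo "SNAPSHOT=/mnt/urbackup_snaps/$SNAP_UID"
exit 0
```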
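Because the script gives the snapshot all remaining free extents of the volume group (-l100%FREE), a snapshot left behind by a failed removal would most likely make the next lvcreate in that group fail. A small sketch for spotting such leftovers follows. The function names are hypothetical, and it assumes the standard lvs fields lv_name and origin, where snapshots are the LVs whose origin field is set.

```bash
#!/bin/bash
# Read 'lvs --noheadings --separator "|" -o lv_name,origin' output on stdin
# and print "snapshot origin" for every LV that has an origin (a snapshot).
parse_snapshots() {
    awk -F'|' '{
        gsub(/^[ \t]+|[ \t]+$/, "", $1)   # trim lvs indentation
        gsub(/^[ \t]+|[ \t]+$/, "", $2)
        if ($2 != "") print $1, $2
    }'
}

# List the snapshots in a volume group (default: mirror-vg). Needs root.
list_snapshots() {
    local vg=${1:-mirror-vg}
    lvs --noheadings --separator '|' -o lv_name,origin "$vg" | parse_snapshots
}
```

Running list_snapshots as root while no backup is in progress should print nothing; any output points to a snapshot that the removal script did not clean up.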

Snapshot removal script at /usr/local/etc/urbackup/remove_filesystem_snapshot:

```bash
#!/bin/bash
# Arguments used here: $1 = snapshot UID, $2 = snapshot mountpoint
set -e

SNAP_UID="$1"
SNAP_MOUNTPOINT="$2"

if [[ $SNAP_UID == "" ]]; then
    echo "No snapshot uid specified"
    exit 1
fi

if [[ "$SNAP_MOUNTPOINT" == "" ]]; then
    echo "Snapshot mountpoint is empty"
    exit 1
fi

if ! test -e "$SNAP_MOUNTPOINT"; then
    echo "Snapshot at $SNAP_MOUNTPOINT was already removed"
    exit 0
fi

if ! df -T -P | grep -E "${SNAP_MOUNTPOINT}\$" > /dev/null 2>&1; then
    echo "Snapshot is not mounted. Already removed"
    rmdir "${SNAP_MOUNTPOINT}"
    exit 0
fi

# Find the snapshot device mounted at the mountpoint
# (older lsblk versions do not support --paths)
if lsblk -r --output "NAME,MOUNTPOINT" --paths > /dev/null 2>&1; then
    VOLNAME=$(lsblk -r --output "NAME,MOUNTPOINT" --paths | grep -E " ${SNAP_MOUNTPOINT}\$" | head -n 1 | tr -s " " | cut -d" " -f1)
else
    VOLNAME=$(lsblk -r --output "NAME,MOUNTPOINT" | grep -E " ${SNAP_MOUNTPOINT}\$" | head -n 1 | tr -s " " | cut -d" " -f1)
    # Only prefix a name that was actually found, so the empty check below works
    if [ -n "$VOLNAME" ]; then
        VOLNAME="/dev/mapper/$VOLNAME"
    fi
fi

if [ "x$VOLNAME" = x ]; then
    echo "Could not find LVM volume for mountpoint ${SNAP_MOUNTPOINT}"
    exit 1
fi

if [ ! -e "$VOLNAME" ]; then
    echo "LVM volume for mountpoint ${SNAP_MOUNTPOINT} does not exist"
    exit 1
fi

echo "Unmounting $VOLNAME at ${SNAP_MOUNTPOINT}..."

# Retry once after a short pause if the file system is still busy
if ! umount "${SNAP_MOUNTPOINT}"; then
    sleep 10
    umount "${SNAP_MOUNTPOINT}"
fi

rmdir "${SNAP_MOUNTPOINT}"

echo "Destroying LVM snapshot $VOLNAME..."
export LVM_SUPPRESS_FD_WARNINGS=1
lvremove -f "$VOLNAME"
```
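The mountpoint-to-device lookup in the removal script could also be done with an exact string comparison instead of a regular expression, so a mountpoint containing regex characters such as a dot cannot match the wrong line. A sketch of that alternative follows. The function name is hypothetical; it reads the same lsblk -r --output NAME,MOUNTPOINT --paths output on stdin.

```bash
#!/bin/bash
# Read 'lsblk -r --output NAME,MOUNTPOINT --paths' output on stdin and print
# the device whose mountpoint is exactly $1 (first match only).
# The mountpoint is passed via the environment rather than 'awk -v', because
# -v would interpret backslash escapes in the value.
device_for_mountpoint() {
    MP="$1" awk '$2 == ENVIRON["MP"] { print $1; exit }'
}
```

In the script it would replace the grep/head/tr/cut pipeline, e.g. VOLNAME=$(lsblk -r --output "NAME,MOUNTPOINT" --paths | device_for_mountpoint "$SNAP_MOUNTPOINT").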

The snapshot scripts are specified via the file /usr/local/etc/urbackup/snapshot.cfg:

```
create_filesystem_snapshot=/usr/local/etc/urbackup/create_filesystem_snapshot
remove_filesystem_snapshot=/usr/local/etc/urbackup/remove_filesystem_snapshot
volumes_mounted_locally=0
```
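Since the client runs the two scripts named in snapshot.cfg, a typo in a path or a missing execute bit only shows up when a backup runs. A hypothetical helper to check the file beforehand follows. It assumes the simple key=value format shown above.

```bash
#!/bin/bash
# Check that the create/remove entries in snapshot.cfg point to existing,
# executable files. Prints one line per problem and returns 1 if any.
check_snapshot_cfg() {
    local cfg=${1:-/usr/local/etc/urbackup/snapshot.cfg}
    local rc=0 key value
    # '|| [ -n "$key" ]' also handles a last line without a trailing newline
    while IFS='=' read -r key value || [ -n "$key" ]; do
        case $key in
            create_filesystem_snapshot|remove_filesystem_snapshot)
                if [ ! -x "$value" ]; then
                    echo "$key: '$value' is missing or not executable"
                    rc=1
                fi
                ;;
        esac
    done < "$cfg"
    return $rc
}
```

Called without an argument it checks /usr/local/etc/urbackup/snapshot.cfg; a non-zero return means one of the scripts is missing or needs chmod +x.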

Then I have a virtual client for each LVM volume that needs to be backed up. I have put those virtual clients in a settings group whose default directories to back up are set to “/|root/require_snapshot”.
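As far as I understand UrBackup's syntax for the default directories setting, each entry has the form path|name/flags, with entries separated by ; and flags by commas. So /|root/require_snapshot means: back up /, name it root, and require a snapshot. The following sketch (hypothetical function name) splits such a setting into its parts, which can help when checking a longer list.

```bash
#!/bin/bash
# Split a "default directories" value into entries (separated by ';') and
# print each as "path<TAB>name<TAB>flags" (name and flags may be empty).
parse_backup_paths() {
    local entry path rest name flags
    printf '%s\n' "$1" | tr ';' '\n' | while IFS= read -r entry; do
        [ -n "$entry" ] || continue
        path=${entry%%"|"*}            # everything before the first '|'
        name= flags=
        case $entry in
            *'|'*)
                rest=${entry#*"|"}     # everything after the first '|'
                name=${rest%%/*}       # name ends at the first '/'
                case $rest in
                    */*) flags=${rest#*/} ;;
                esac
                ;;
        esac
        printf '%s\t%s\t%s\n' "$path" "$name" "$flags"
    done
}
```

For example, parse_backup_paths '/|root/require_snapshot' prints /, root and require_snapshot separated by tabs.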

To restore, I recreate the LVM volume, create a file system on it (e.g. with mkfs.ext4), mount it in the hypervisor and then restore via:

```bash
urbackupclientctl restore-start --virtual-client VOLUMENAME -b last --map-from / --map-to /mnt/localmountpoint
```
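Put together, the restore of a single volume might look like the sketch below. The function name, the size argument and the fixed mountpoint /mnt/localmountpoint are assumptions, and the new volume has to be large enough for the restored data. The commands are only printed so they can be reviewed before running them as root.

```bash
#!/bin/bash
# Dry run: print the restore steps for one volume.
# $1 = volume / virtual client name, $2 = size for lvcreate -L,
# $3 = volume group (default mirror-vg). All values are examples.
print_restore_cmds() {
    local vol=$1 size=$2 vg=${3:-mirror-vg} mnt=/mnt/localmountpoint

    # Recreate the logical volume and put a fresh file system on it
    echo "lvcreate -L $size -n $vol $vg"
    echo "mkfs.ext4 /dev/$vg/$vol"

    # Mount it in the hypervisor
    echo "mkdir -p $mnt"
    echo "mount /dev/$vg/$vol $mnt"

    # Restore the last backup of the matching virtual client into it
    echo "urbackupclientctl restore-start --virtual-client $vol -b last --map-from / --map-to $mnt"
}
```

For example, print_restore_cmds web 20G prints the steps for a hypothetical volume named web.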